What is SpeakRight?

SpeakRight is an open-source Java framework for writing speech recognition applications in VoiceXML. Unlike most proprietary speech-app tools, SpeakRight is code-based. Applications are written in Java using SpeakRight's extensible classes. Java IDEs such as Eclipse provide great debugging, fast Java-aware editing, and refactoring. Dynamic generation of VoiceXML is done using the popular StringTemplate templating framework.

Monday, February 26, 2007

First Release!

This is my first open-source project. It's been a steep learning curve, but getting this far is due to some fine OSS software: Eclipse, Subversion, SourceForge, StringTemplate, JUnit, XMLUnit, Skype, and the Voxeo community.

Saturday, February 24, 2007

Control Flow, Errors, and Event Handling

A sequence of flow objects is executed by giving a flow object a list of sub-flow objects. Every time its getNext method is called, it returns the next object from the list.

Conditional flow is done by adding logic in getNext, to return one flow object or another based on some condition.

Looping is done by having getNext return its sub-flows more than once, iterating over them multiple times.
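The three patterns above (sequence, conditional, loop) all come down to what getNext returns. Below is a minimal sketch, assuming hypothetical simplified stand-ins (`Flow`, `Results`, `LeafFlow`, `SequenceFlow`, `BranchFlow`) rather than SpeakRight's real `IFlow` and `SRResults` classes:

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Hypothetical, simplified stand-ins for IFlow and SRResults; the real
// SpeakRight interfaces have more methods (execute, error handlers, ...).
interface Flow {
    Flow getFirst();
    Flow getNext(Flow current, Results results);
}

class Results {
    final String input; // what the user said (simplified to a string)
    Results(String input) { this.input = input; }
}

// A leaf: getFirst returns itself, getNext reports "finished" (null).
class LeafFlow implements Flow {
    final String name;
    LeafFlow(String name) { this.name = name; }
    public Flow getFirst() { return this; }
    public Flow getNext(Flow current, Results results) { return null; }
}

// Sequence and loop: hands out its sub-flows in order, going through the
// list 'passes' times (passes == 1 is a plain sequence).
class SequenceFlow implements Flow {
    private final List<Flow> subFlows;
    private final int passes;
    private int position = 0; // how many sub-flows have been handed out

    SequenceFlow(int passes, Flow... subFlows) {
        this.passes = passes;
        this.subFlows = new ArrayList<>(Arrays.asList(subFlows));
    }

    public Flow getFirst() {
        position = 0;
        return nextSubFlow();
    }

    public Flow getNext(Flow current, Results results) {
        return nextSubFlow();
    }

    private Flow nextSubFlow() {
        if (position >= subFlows.size() * passes) {
            return null; // every pass is done: this flow has finished
        }
        return subFlows.get(position++ % subFlows.size());
    }
}

// Conditional: once the question has run, pick a branch from its results.
class BranchFlow implements Flow {
    private final Flow question, onYes, onNo;

    BranchFlow(Flow question, Flow onYes, Flow onNo) {
        this.question = question;
        this.onYes = onYes;
        this.onNo = onNo;
    }

    public Flow getFirst() { return question; }

    public Flow getNext(Flow current, Results results) {
        if (current == question) {
            return "yes".equals(results.input) ? onYes : onNo;
        }
        return null; // the chosen branch has finished
    }
}
```

Note that none of these classes contains a loop statement: repetition and branching live entirely in the value getNext hands back.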

Error Handling

Callflows can have errors, such as user errors (failing to say anything recognizable) and application errors (missing files, database errors, etc.). SpeakRight manages error handling separately from the getFirst/getNext mechanism: getNext handles the error-free case, and if an error occurs, one of the IFlow error-handling methods is called instead.

```java
IFlow OnNoInput(current, results);        // app was expecting input and none was provided by the user
IFlow OnException(current, results);      // a generic failure, such as an exception being thrown
IFlow OnDisconnect(current, results);     // user terminated the interaction (usually by hanging up the phone; how would this work in multimodal?)
IFlow OnHalt(current, results);           // system is stopping
IFlow OnValidateFailed(current, results); // user input failed validation
```

Note that a number of things that aren't really errors are handled this way. The goal is to keep the "nexting" logic clean, and handle everything else separately.

Errors are handled in a similar manner to exceptions: a search up the flow stack is done for an error handler. If the current flow doesn't have one, then its parent is tried, and so on. It's a runtime error if no error handler is found.

The outermost flow is usually a class derived from SRApp. SRApp provides error handlers with default behaviour: they play a prompt indicating that a problem has occurred, and transfer the call to an operator.
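The handler search can be sketched as a walk from the top of the flow stack toward the outermost flow. This is a minimal illustration, not SpeakRight's code: `ErrorKind`, `Node`, `AppNode`, and `ErrorDispatcher` are hypothetical, and handlers return a name string where the real handlers return an `IFlow`.

```java
import java.util.ArrayDeque;
import java.util.Deque;

// Hypothetical error kinds, mirroring OnNoInput, OnException, and so on.
enum ErrorKind { NO_INPUT, EXCEPTION, DISCONNECT, HALT, VALIDATE_FAILED }

// Hypothetical flow: onError returns a handler, or null for "not handled here".
abstract class Node {
    final String name;
    Node(String name) { this.name = name; }
    String onError(ErrorKind kind) { return null; } // default: not handled
}

// Plays the role of SRApp: the outermost flow handles every error.
class AppNode extends Node {
    AppNode() { super("app"); }
    @Override String onError(ErrorKind kind) {
        return "play-problem-prompt-and-transfer-to-operator";
    }
}

class ErrorDispatcher {
    // Try the current (top-most) flow first, then each parent in turn.
    static String dispatch(Deque<Node> flowStack, ErrorKind kind) {
        for (Node flow : flowStack) { // ArrayDeque iterates from the top (head)
            String handler = flow.onError(kind);
            if (handler != null) return handler;
        }
        throw new IllegalStateException("no handler found for " + kind);
    }
}
```

Because `AppNode` handles everything, a flow only overrides `onError` for the errors it wants to handle locally; any other error falls through to the application-level default.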

Catch and Throw

The basic flow of control in a SpeakRight app is the nesting of flow objects. These behave like subroutine calls: when the nested flow finishes, the parent flow resumes execution. Sometimes a non-local transfer of control is needed, so SpeakRight supports a generic throw and catch approach. A flow can throw a custom flow event, which MUST be caught by a flow above it in the flow stack.

```java
return new ThrowFlowEvent("abc");
```

and the catch looks like any other error handler:

```java
IFlow OnCatch(current, results, thrownEvent);
```

Note: as with all other handlers, a flow object can catch its own throw. This may seem odd, but it lets developers move code around easily.

Some control flow is also possible in execute. For example, if you want a flow to branch when a database error happens, you can throw a flow event from execute. However, in this case a flow object cannot catch its own flow event, since that would cause execute to be called again, leading to infinite recursion.

Update: See also Optional Sub-Flow Objects

GotoUrlFlow

The GotoUrlFlow flow object is used to redirect the VoiceXML browser to an external URL. It is used to redirect to static VoiceXML pages or to another application.
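Rendering such a redirect takes very little, because VoiceXML has a `<goto next="...">` element for exactly this purpose. The sketch below assumes a hypothetical `GotoUrlSketch` class and page layout; only the `<vxml>`, `<form>`, `<block>`, and `<goto>` elements themselves are standard VoiceXML.

```java
// Hypothetical sketch of the page a GotoUrlFlow-style object might render.
class GotoUrlSketch {
    private final String url;

    GotoUrlSketch(String url) { this.url = url; }

    // Escape characters that may not appear raw inside an XML attribute.
    private static String escapeAttr(String s) {
        return s.replace("&", "&amp;").replace("\"", "&quot;").replace("<", "&lt;");
    }

    // A one-form page whose only action is to send the browser elsewhere.
    String render() {
        return "<?xml version=\"1.0\" encoding=\"UTF-8\"?>\n"
             + "<vxml version=\"2.1\" xmlns=\"http://www.w3.org/2001/vxml\">\n"
             + "  <form>\n"
             + "    <block><goto next=\"" + escapeAttr(url) + "\"/></block>\n"
             + "  </form>\n"
             + "</vxml>\n";
    }
}
```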

Internal Architecture

- Application code

- Web server

- VoiceXML browser

- VoiceXML platform

- Telephony hardware, VOIP stack

SpeakRight lives in the application code layer, typically in a servlet. The SpeakRight runtime dynamically generates VoiceXML pages, one per HTTP request. Between requests the runtime is stateless, in the same sense as a "stateless bean": state is saved in the servlet session, and restored on each HTTP request.
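A rough sketch of that save-and-restore cycle, assuming Java serialization and a plain `Map` standing in for the servlet session (a real servlet would use `HttpSession`). `RuntimeState` and `StatelessRuntime` are hypothetical names; in SpeakRight the flow objects and flow stack would be what gets saved.

```java
import java.io.*;
import java.util.HashMap;
import java.util.Map;

// Hypothetical state that must survive between HTTP requests.
class RuntimeState implements Serializable {
    private static final long serialVersionUID = 1L;
    int turn = 0;
}

class StatelessRuntime {
    // Stand-in for the servlet session, keyed by session id.
    private final Map<String, byte[]> session = new HashMap<>();

    // One HTTP request: restore state, produce one page, save state.
    String handleRequest(String sessionId) {
        RuntimeState state = load(sessionId);
        state.turn++;
        String page = "<!-- VoiceXML page for turn " + state.turn + " -->";
        save(sessionId, state);
        return page;
    }

    private RuntimeState load(String id) {
        byte[] bytes = session.get(id);
        if (bytes == null) return new RuntimeState(); // first request of the call
        try (ObjectInputStream in = new ObjectInputStream(new ByteArrayInputStream(bytes))) {
            return (RuntimeState) in.readObject();
        } catch (IOException | ClassNotFoundException e) {
            throw new IllegalStateException("could not restore session state", e);
        }
    }

    private void save(String id, RuntimeState state) {
        ByteArrayOutputStream buf = new ByteArrayOutputStream();
        try (ObjectOutputStream out = new ObjectOutputStream(buf)) {
            out.writeObject(state);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        session.put(id, buf.toByteArray());
    }
}
```

Nothing is kept in the runtime's own fields between calls except the session store, which is what lets any request for a given call be served from the saved state.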

The SpeakRight framework is a set of Java classes specifically designed for writing speech recognition applications. Although VoiceXML uses a web architecture similar to HTML's, the needs of a speech app are very different (see Why Speech is Hard TBD).

SpeakRight has a Model-View-Controller (MVC) architecture similar to GUI frameworks. In GUIs, a control represents both the view and the controller, and controls can be combined using nesting to produce larger GUI elements. In SpeakRight, a flow object represents the view and controller, and flow objects can be combined using nesting to produce larger dialogs. Flow objects can be customized by setting their properties (getter/setter methods), and extended through inheritance and extension points. For instance, the confirmation strategy used by a flow object is represented by another flow object, so various types of confirmation can be plugged in.

Flow objects contain sub-flow objects. The application is simply the top-level flow object.

Flow objects implement the IFlow interface. The basics of this interface are:

```java
IFlow getFirst();
IFlow getNext(IFlow current, SRResults results);
void execute(ExecutionContext context);
```

getFirst returns the first flow object to be run. A flow object with sub-flows would return its first sub-flow object. A leaf object (one with no sub-flows) returns itself. (See also Optional Sub-Flow Objects.)

getNext returns the next flow object to be run. It is passed the results of the previous flow object to help it decide. The results contain user input and other events sent by the VoiceXML platform.

In the execute method, the flow object renders itself into a VoiceXML page (see also StringTemplate template engine).

Execution uses a flow stack. An application starts by pushing the application flow object (the outer-most flow object) onto the stack. Pushing a flow object is known as activation. If the application object's getFirst returns a sub-flow then the sub-flow is pushed onto the stack. This process continues until a leaf object is encountered. At this point all the flow objects on the stack are considered "active". Now the runtime executes the top-most stack object, calling its execute method. The rendered content (a VoiceXML page) is sent to the VoiceXML platform.

When the results of the VoiceXML page are returned, the runtime gives them to the top-most flow object in the stack, by calling its getNext method. This method can do one of three things:

- return null to indicate it has finished. A finished flow object is popped off the stack and the next flow object is executed.

- return itself to indicate it wants to execute again.

- return a sub-flow, which is activated (pushed onto the stack).
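The activation and getNext rules above can be sketched as a small stack-driven loop. This sketch assumes simplified hypothetical types (`RuntimeFlow`, `Leaf`, `Sequence`, `FlowRuntime`), reduces results to a string, and assumes that when a flow finishes, its parent's getNext is called (with the finished flow as `current`) to decide what runs next:

```java
import java.util.ArrayDeque;
import java.util.Deque;

// Hypothetical, simplified IFlow basics: execute() returns the page text.
interface RuntimeFlow {
    RuntimeFlow getFirst();
    RuntimeFlow getNext(RuntimeFlow current, String results);
    String execute();
}

class Leaf implements RuntimeFlow {
    private final String text;
    Leaf(String text) { this.text = text; }
    public RuntimeFlow getFirst() { return this; } // a leaf returns itself
    public RuntimeFlow getNext(RuntimeFlow current, String results) {
        return "retry".equals(results) ? this : null; // run again, or finish
    }
    public String execute() { return "<prompt>" + text + "</prompt>"; }
}

class Sequence implements RuntimeFlow {
    private final RuntimeFlow[] children;
    private int index = 0;
    Sequence(RuntimeFlow... children) { this.children = children; }
    public RuntimeFlow getFirst() { index = 0; return children[0]; }
    public RuntimeFlow getNext(RuntimeFlow current, String results) {
        index++;
        return index < children.length ? children[index] : null;
    }
    public String execute() { throw new IllegalStateException("only leaves render pages"); }
}

class FlowRuntime {
    private final Deque<RuntimeFlow> stack = new ArrayDeque<>();

    // Activation: push the flow, then keep pushing getFirst() until a leaf
    // (a flow whose getFirst() returns itself) is on top.
    // Assumes containers are non-empty, so getFirst() never returns null.
    private void activate(RuntimeFlow flow) {
        stack.push(flow);
        RuntimeFlow first = flow.getFirst();
        while (first != flow) {
            stack.push(first);
            flow = first;
            first = flow.getFirst();
        }
    }

    String start(RuntimeFlow app) {
        stack.clear();
        activate(app);
        return stack.peek().execute();
    }

    // Called with the results of the last page; returns the next page,
    // or null once the application flow itself has finished.
    String proceed(String results) {
        RuntimeFlow current = stack.peek();
        RuntimeFlow next = current.getNext(current, results);
        while (next == null) {                      // current has finished
            RuntimeFlow finished = stack.pop();
            if (stack.isEmpty()) return null;
            current = stack.peek();
            next = current.getNext(finished, results); // assumption: the parent decides
        }
        if (next != current) activate(next);        // a sub-flow: push it
        return stack.peek().execute();              // run the top-most flow
    }
}
```

For an app built as `new Sequence(new Leaf("Welcome"), new Leaf("Goodbye"))`, `start` renders the welcome page; a `"retry"` result re-executes it; the next result pops it and lets the sequence activate the goodbye leaf; after that, the stack empties and `proceed` returns null.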

Table of Contents

- What is SpeakRight?

- The Benefits of a Code-Based Approach

- Features

- FAQ

- Tutorial, Simpsons Demo

- SourceForge, Download

SpeakRight documentation

- Getting Started

- Tutorial and Simpsons Demo

- Javadocs

- Architecture

- Internal Architecture

- Servlets

- Extension Points

- Performance

- StringTemplate template engine

- Initialization

- Flow Objects

- Prompts, Grammars

- Prompts in SROs

- DTMFOnlyMode

- Internationalization

- NBest

- Call Control

- Control Flow, Errors and Event Handling

- HotWords

- SpeakRight Reusable Objects (SROs)

- VoiceXML

- Testing

- Project Plan

- Wish List

- Contributors

- Powered By

Sunday, February 18, 2007

Grammars

SpeakRight supports three types of grammars: external grammars (referenced by URL), built-in grammars, and inline grammars (which use a simplified GSL format). Grammars work much like prompts. You specify a grammar text, known as a gtext, that uses a simple formatting language:

- (no prefix). The grammar text is a URL. It can be an absolute or relative URL.

- inline: prefix. An inline grammar. The prefix is followed by a simplified version of GSL, such as "small medium [large big] (very large)".

- builtin: prefix. One of VoiceXML's built-in grammars. The prefix is followed by something like "digits?minlength=3;maxlength=9"

When a flow object is rendered, its grammars are rendered using a pipeline that applies the following logic:

- check the grammar condition. If false then skip the grammar.

- parse an inline grammar into its word list

- parse the builtin grammar

- convert relative URLs into absolute URLs
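The prefix check and the inline and builtin parsing steps can be sketched as follows. The parsing rules are assumptions based on the descriptions above: top-level words and the members of `[ ]` are treated as alternatives, and `( )` groups a multi-word phrase. `GText` is a hypothetical class; the condition check and URL conversion are left out.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

class GText {
    enum Kind { URL, INLINE, BUILTIN }

    // A "(...)" phrase, or a single word containing no brackets or spaces.
    private static final Pattern TOKEN = Pattern.compile("\\(([^)]*)\\)|[^\\s\\[\\]()]+");

    final Kind kind;
    final String body; // the gtext with its prefix removed

    GText(String gtext) {
        if (gtext.startsWith("inline:")) { kind = Kind.INLINE; body = gtext.substring(7); }
        else if (gtext.startsWith("builtin:")) { kind = Kind.BUILTIN; body = gtext.substring(8); }
        else { kind = Kind.URL; body = gtext; } // no prefix: a URL
    }

    // "small medium [large big] (very large)" -> one entry per phrase.
    List<String> wordList() {
        List<String> words = new ArrayList<>();
        Matcher m = TOKEN.matcher(body);
        while (m.find()) {
            if (m.group(1) != null) {
                words.add(m.group(1).trim().replaceAll("\\s+", " ")); // (phrase)
            } else {
                words.add(m.group()); // single word; bare [ and ] are skipped
            }
        }
        return words;
    }

    // "digits?minlength=3;maxlength=9" -> "digits"
    String builtinName() {
        int q = body.indexOf('?');
        return q < 0 ? body : body.substring(0, q);
    }

    // "digits?minlength=3;maxlength=9" -> {minlength=3, maxlength=9}
    Map<String, String> builtinParams() {
        Map<String, String> params = new LinkedHashMap<>();
        int q = body.indexOf('?');
        if (q < 0) return params;
        for (String pair : body.substring(q + 1).split(";")) {
            int eq = pair.indexOf('=');
            if (eq > 0) params.put(pair.substring(0, eq), pair.substring(eq + 1));
        }
        return params;
    }
}
```

In the rendered page, VoiceXML itself refers to built-ins with URIs such as `builtin:grammar/digits?minlength=3;maxlength=9` (or `builtin:dtmf/digits?...` for touchtone), so the parsed name and parameters map naturally onto that form.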

The grammar text is a URL. It can be an absolute URL (e.g. http://www.somecompany.com/speechapp7/grammars/fruits.grxml), or a relative URL. Relative URLs (e.g. "grammars/fruits.grxml") are converted into absolute URLs when the grammar is rendered. The servlet's URL is currently used as the base URL for this conversion.

The grammar file extension is used to determine the type attribute of the grammar tag:

- ".grxml" means type="application/srgs+xml"

- ".gsl" means type="text/gsl"

- all other files are assumed to be ABNF SRGS format, type="application/srgs"
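Relative-URL conversion and the extension-to-type rule map directly onto `java.net.URI.resolve` and a few `endsWith` checks. A sketch, where `GrammarRef` and the servlet URLs used with it are hypothetical:

```java
import java.net.URI;

class GrammarRef {
    // Resolve a relative grammar URL against the servlet's URL (the base).
    // Absolute grammar URLs are returned unchanged by URI.resolve.
    static String toAbsolute(String servletUrl, String grammarUrl) {
        return URI.create(servletUrl).resolve(grammarUrl).toString();
    }

    // Choose the grammar tag's type attribute from the file extension.
    static String typeFor(String grammarUrl) {
        String path = URI.create(grammarUrl).getPath(); // ignores any ?query
        if (path.endsWith(".grxml")) return "application/srgs+xml";
        if (path.endsWith(".gsl")) return "text/gsl";
        return "application/srgs"; // everything else: ABNF SRGS
    }

    static String render(String servletUrl, String gtext) {
        String url = toAbsolute(servletUrl, gtext);
        return "<grammar src=\"" + url + "\" type=\"" + typeFor(url) + "\"/>";
    }
}
```

With a base of `http://www.somecompany.com/speechapp7/app`, `grammars/fruits.grxml` resolves to `http://www.somecompany.com/speechapp7/grammars/fruits.grxml`, because resolution replaces the last path segment of the base, as RFC 3986 specifies.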

Built-In Grammars

TBD. Built-ins are part of VoiceXML 2.0, but optional. They are also intended for prototyping, and it's recommended that applications use full, properly tuned grammars.

Inline Grammars

GSL is (I believe) a proprietary Nuance format. SpeakRight uses a simplified version that currently only supports [ ] and ( ).

A single utterance can contain one or more slots. The simplest directed-dialog VUIs use single-slot questions, such as "How many passengers are there?". SpeakRight only supports single-slot questions for now.

Grammar Types

There are two types of grammars (represented by the GrammarType enum).

- VOICE is for spoken input

- DTMF is for touchtone digits

DTMF Only Mode

Speech recognition may not work at all in very noisy environments. Not only will recognition fail, but prompts may never play due to false barge-in. For these reasons, speech applications should be able to fall back to a DTMF-only mode. This mode can be activated by the user by pressing a certain key, usually '*'. Once activated, SpeakRight will not render any VOICE grammars. Thus the VoiceXML engine will only listen for DTMF digits.
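In rendering terms, DTMF-only mode is just a filter on the grammar list. A sketch with simplified stand-in types (the `GrammarType` enum mirrors the one described above; `Grammar` and `GrammarFilter` are hypothetical), assuming '*' is the switch key:

```java
import java.util.ArrayList;
import java.util.List;

enum GrammarType { VOICE, DTMF } // stand-in for SpeakRight's GrammarType

class Grammar {
    final GrammarType type;
    final String gtext;
    Grammar(GrammarType type, String gtext) { this.type = type; this.gtext = gtext; }
}

class GrammarFilter {
    // In DTMF-only mode, VOICE grammars are not rendered, so the
    // VoiceXML engine only listens for touchtone digits.
    static List<Grammar> grammarsToRender(List<Grammar> all, boolean dtmfOnlyMode) {
        List<Grammar> out = new ArrayList<>();
        for (Grammar g : all) {
            if (dtmfOnlyMode && g.type == GrammarType.VOICE) continue;
            out.add(g);
        }
        return out;
    }

    // Once activated (by pressing '*'), the mode stays on.
    static boolean updateMode(boolean dtmfOnlyMode, String dtmfInput) {
        return dtmfOnlyMode || "*".equals(dtmfInput);
    }
}
```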

Slots

A grammar represents a series of words, such as "A large pizza please". The application may only care about a few of the words; here, the size word "large" is the only word of importance to the app. These words are attached to named return values called slots. In our pizza example, a slot called "size" would be bound to the words "small", "medium", or "large". Any of those words would fill the slot.

Slots define the interface between a grammar and a VoiceXML field. The field's name defines the slot that a grammar must fill in order for the field to be filled.

Any grammar that fills the slot "size" can be used.

A single utterance can fill multiple slots, as in "I would like to fly to Atlanta on Friday."

SpeakRight doesn't yet support multi-slot questions.

Prompts

Here is a basic prompt that plays some text using TTS (text-to-speech):

"Welcome to Inky's Travel".

Prompt texts (PTexts) are plain Java strings. Here's another prompt:

"Welcome to Inky's Travel. {audio:logo.wav}"

This prompt contains two items: a TTS phrase and an audio file. Items are delimited by '{' and '}'. The delimiters are optional for the first item. This is equivalent:

"{Welcome to Inky's Travel. }{audio:logo.wav}"

PTexts can contain as many items as you want. They will be rendered as a prompt:

```xml
<prompt>Welcome to Inky's Travel. <audio src="http://myIPaddress/logo.wav"></audio>
</prompt>
```

For convenience, audio items can be specified without the "audio:" prefix. The following is equivalent to the previous prompt. The prefix is optional if the filename ends in ".wav" and contains no whitespace characters.

"{Welcome to Inky's Travel. }{logo.wav}"

You can also add pauses by using periods inside an item. Each period represents 250 msec. Pause items must contain only periods (otherwise they're treated as TTS). Here's a 750 msec pause:

"{Welcome to Inky's Travel. }{...}{logo.wav}"

Model variables can be used as prompt items by giving them a "$M." prefix. The model variable's value is rendered.

"The current price is {$M.price}"

"You chose {$INPUT}"

Fields (i.e. member variables) of a flow object can also be prompt items, by wrapping the field name in '%'. For example, if a flow class has the member variable int m_numPassengers, its value can be played in a prompt this way.

If you're familiar with SSML, you can use raw prompt items, which have a "raw:" prefix. These are output as is, and can contain SSML tags.

Lastly, there are id prompt items, which are references to an external prompt string in an XML file. This is useful for multi-lingual apps, or for changing prompts after deployment. See Prompt Ids and Prompt XML Files.

"id:sayPrice"

In summary, these are the prompt item types:

- "audio:" audio prompts

- "$M." model values

- "%value%" field values (of currently executing flow object)

- ".." pause (250 msec for each period)

- "raw:" raw SSML prompts

- "id:" id prompts

- TTS prompt (any prompt item that doesn't match one of the above types is played as TTS)
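The item syntax above can be parsed in a single pass over the string. This sketch assumes the rules as described here (braces delimit items, the first item may be unbraced, each period is 250 msec) and checks the item types in a fixed order; `PromptItem` and `PTextParser` are hypothetical, and `$INPUT` items are not handled (they fall through to TTS).

```java
import java.util.ArrayList;
import java.util.List;

class PromptItem {
    enum Kind { TTS, AUDIO, PAUSE, MODEL, FIELD, RAW, ID }
    final Kind kind;
    final String text; // for PAUSE: the length in msec
    PromptItem(Kind kind, String text) { this.kind = kind; this.text = text; }
    @Override public String toString() { return kind + "(" + text + ")"; }
}

class PTextParser {
    // Split a ptext into items; text outside braces forms an item of its own.
    static List<PromptItem> parse(String ptext) {
        List<PromptItem> items = new ArrayList<>();
        StringBuilder outside = new StringBuilder();
        int i = 0;
        while (i < ptext.length()) {
            char c = ptext.charAt(i);
            if (c == '{') {
                int close = ptext.indexOf('}', i);
                if (close < 0) throw new IllegalArgumentException("unclosed '{' in: " + ptext);
                flush(outside, items);
                items.add(classify(ptext.substring(i + 1, close)));
                i = close + 1;
            } else {
                outside.append(c);
                i++;
            }
        }
        flush(outside, items);
        return items;
    }

    private static void flush(StringBuilder sb, List<PromptItem> items) {
        if (sb.toString().trim().length() > 0) items.add(classify(sb.toString()));
        sb.setLength(0);
    }

    static PromptItem classify(String item) {
        String t = item.trim();
        if (t.startsWith("audio:")) return new PromptItem(PromptItem.Kind.AUDIO, t.substring(6));
        if (t.endsWith(".wav") && !t.matches(".*\\s.*")) return new PromptItem(PromptItem.Kind.AUDIO, t);
        if (t.matches("\\.+")) return new PromptItem(PromptItem.Kind.PAUSE, String.valueOf(250 * t.length()));
        if (t.startsWith("$M.")) return new PromptItem(PromptItem.Kind.MODEL, t.substring(3));
        if (t.length() > 2 && t.startsWith("%") && t.endsWith("%"))
            return new PromptItem(PromptItem.Kind.FIELD, t.substring(1, t.length() - 1));
        if (t.startsWith("raw:")) return new PromptItem(PromptItem.Kind.RAW, t.substring(4));
        if (t.startsWith("id:")) return new PromptItem(PromptItem.Kind.ID, t.substring(3));
        return new PromptItem(PromptItem.Kind.TTS, item); // keep original spacing for TTS
    }
}
```

For example, "Welcome to Inky's Travel. {...}{logo.wav}" parses into three items: a TTS phrase, a 750 msec pause, and the audio file logo.wav.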

Prompt Conditions

By default, all the prompts in a flow object are played. However, there are occasions when the playing of a prompt needs to be controlled by a condition. Conditions are evaluated when the flow object is executed; if the condition returns false, the prompt is not played.

| Condition | Description |
|---|---|
| none | Always play the prompt. |
| PlayOnce | Only play the first time the flow is executed. If the flow is re-executed (the same flow object executes more than once in a row), don't play the prompt. PlayOnce is useful in menus, where the initial prompt may contain information that should only be played once. |
| PlayOnceEver | Only play once during the entire session (phone call). |
| PlayIfEmpty | Only play if the given model variable is empty (""). Useful if you want to play a prompt as long as something has not yet occurred. |
| PlayIfNotEmpty | Only play if the given model variable is not empty (""). |
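The conditions can be expressed as a single decision function. A sketch, assuming hypothetical names (`PromptCondition`, `ConditionEvaluator`, a per-prompt key for PlayOnceEver) and that the runtime tracks how many times in a row the flow has executed:

```java
import java.util.HashSet;
import java.util.Map;
import java.util.Set;

enum PromptCondition { NONE, PLAY_ONCE, PLAY_ONCE_EVER, PLAY_IF_EMPTY, PLAY_IF_NOT_EMPTY }

class ConditionEvaluator {
    // Lives as long as the session (phone call).
    private final Set<String> playedThisSession = new HashSet<>();

    /**
     * @param promptKey      hypothetical unique key for the prompt (for PlayOnceEver)
     * @param executionCount times in a row this flow has executed (1 = first time)
     * @param model          model variables, by name
     * @param modelVar       the model variable named by PlayIfEmpty/PlayIfNotEmpty
     */
    boolean shouldPlay(PromptCondition cond, String promptKey, int executionCount,
                       Map<String, String> model, String modelVar) {
        switch (cond) {
            case PLAY_ONCE:
                return executionCount == 1;
            case PLAY_ONCE_EVER:
                return playedThisSession.add(promptKey); // false if already played
            case PLAY_IF_EMPTY:
                return model.getOrDefault(modelVar, "").isEmpty();
            case PLAY_IF_NOT_EMPTY:
                return !model.getOrDefault(modelVar, "").isEmpty();
            default:
                return true; // NONE: always play
        }
    }
}
```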

Prompt Rendering

Prompts are rendered using a pipeline of steps. The order of the steps has been chosen to maximize usefulness.

| Step | Description |
|---|---|
| 1 | Apply the condition. If false, skip the prompt. |
| 2 | Resolve ids. Read the external XML file and replace each prompt id with its specified prompt text. |
| 3 | Evaluate model values. |
| 4 | Call fixup handlers in the flow objects. The IFlow method fixupPrompt allows a flow object to tweak TTS prompt items. |
| 5 | Merge runs of TTS items into a single item. |
| 6 | Do audio matching. An external XML file defines TTS text for which an audio file exists; the text is replaced with the audio file. |

The result is a list of TTS and/or audio items that are sent to the page writer.
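Step 5 follows step 3, so once a model value has been evaluated into text, merging it with the neighbouring TTS items yields one whole phrase, which presumably gives audio matching (step 6) a complete phrase to look up. A sketch with a hypothetical `Item` type:

```java
import java.util.ArrayList;
import java.util.List;

class Item {
    final boolean tts; // true = TTS text, false = audio file
    final String text;
    Item(boolean tts, String text) { this.tts = tts; this.text = text; }
}

class TtsMerger {
    // Collapse each run of adjacent TTS items into one item; audio items
    // break a run and are passed through unchanged.
    static List<Item> mergeRuns(List<Item> items) {
        List<Item> out = new ArrayList<>();
        for (Item item : items) {
            int last = out.size() - 1;
            if (item.tts && last >= 0 && out.get(last).tts) {
                out.set(last, new Item(true, out.get(last).text + item.text));
            } else {
                out.add(item);
            }
        }
        return out;
    }
}
```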

Audio matching

Audio matching is a technique that lets you use TTS for the initial speech app development. Once the app is fairly stable, record audio files for all the prompts. Then create an audio matching XML file that lists each audio file and the prompt text it replaces. Now when the SpeakRight application runs, matching text is automatically replaced with the audio file. No source code changes are required.

The match is a soft match that ignores case and punctuation. That is, a prompt item "Dr. Smith lives on Maple Dr." would match an audio-match text of "dr smith lives on maple dr".
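The soft match can be sketched as normalizing both sides to a lookup key. This assumes one particular normalization (lower-casing, removing ASCII punctuation via `\p{Punct}`, collapsing whitespace) and leaves out reading the XML file; `AudioMatcher` is hypothetical:

```java
import java.util.HashMap;
import java.util.Locale;
import java.util.Map;

class AudioMatcher {
    private final Map<String, String> audioByText = new HashMap<>();

    // Soft-match key: lower case, punctuation removed, whitespace collapsed.
    static String normalize(String text) {
        return text.toLowerCase(Locale.ROOT)
                   .replaceAll("\\p{Punct}", "")
                   .replaceAll("\\s+", " ")
                   .trim();
    }

    // One entry per (audio file, prompt text) pair from the audio-matching file.
    void addEntry(String audioFile, String promptText) {
        audioByText.put(normalize(promptText), audioFile);
    }

    // Returns the audio file that replaces this TTS item, or null to keep TTS.
    String match(String ttsItem) {
        return audioByText.get(normalize(ttsItem));
    }
}
```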

Audio matching works at the item level. (Open question: do we need to support some tag for matching text that spans multiple items?)

Saturday, February 17, 2007

What is SpeakRight?

SpeakRight is based around the flow object which has a similar role to controls in GUI frameworks. A flow object manages presentation (prompts, grammars, and retry logic), and control flow. In MVC terms, a flow object is both the view and the controller. Flow objects can be customized (by setting properties), or extended using sub-classing. SpeakRight provides built-in objects for standard data types (time, date, alphanum), and for standard flow algorithms (forms, menus, list traversal, confirmation).

Flow objects can contain sub-flow objects. This nesting continues all the way out to a single application flow object. At runtime SpeakRight executes the application flow object. It may decide that the first thing to do is to execute a sub-flow. The sub-flow may execute its own sub-flow, and so on. Nesting continues until a leaf flow object that actually produces VoiceXML content is encountered. At this point, a flow stack has been built up of all the active flow objects. The topmost item in the stack is the leaf. Only one flow object executes at a time. SpeakRight persists itself across HTTP requests (using serialization). The results of the VoiceXML page arrive back at the servlet as a new HTTP request. SpeakRight gives the results to the currently executing flow object, which can do one of three things:

- execute again, and produce another VoiceXML page

- return null to indicate it has finished. It will be popped off the flow stack and the next flow object will be executed.

- "throw" an event. SpeakRight uses a Throw/Catch style of event handling. A thrown event causes a search down the stack looking for a handler.

SpeakRight prompts use a powerful formatting system. A prompt string such as "The current interest rate is {$M.rate} percent. Please wait while we get your portfolio {..}{music.wav}" represents:

- a TTS (text-to-speech) utterance, "The current interest rate is"

- a model value

- more TTS, "percent. Please wait while we get your portfolio"

- silence (500 msec)

- an audio file

SpeakRight grammars are... GRXML files. I've totally punted in this early version. Dynamic and GSL grammars will come later, but for now, get the wonderful Grammar Editor that comes (free) with the Microsoft Speech Application SDK (SASDK for MSS 2004 R2).

MVC architecture requires a Model, and SpeakRight has one. The model is used to share data between flow objects. User input (or data retrieved from a database) that needs to be used later in the call flow should be put into the model. SpeakRight provides binding so this occurs automatically. In SpeakRight, the model is produced by a code-generation tool called MGen. You write a simple XML file specifying your model fields (such as "Date departureDate") and MGen generates a type-safe Model.java file. Your application can now use IntelliSense (called Code Assist in Eclipse) to inspect the model variables. Each model variable has get, set, and clear methods.
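For illustration, here is the kind of class a generator like MGen might emit for a model containing `Date departureDate`. This is an assumption about the general shape of the output, not MGen's actual output; only the get/set/clear trio comes from the description above, and `Serializable` is assumed because SpeakRight persists its state between requests using serialization.

```java
import java.io.Serializable;
import java.util.Date;

// Hypothetical generated model: one private field plus get/set/clear per variable.
class Model implements Serializable {
    private static final long serialVersionUID = 1L;

    private Date departureDate; // null means "not set"

    public Date getDepartureDate() { return departureDate; }
    public void setDepartureDate(Date value) { departureDate = value; }
    public void clearDepartureDate() { departureDate = null; }
}
```

Because the accessors are ordinary typed methods, a misspelled variable name or a wrong type is a compile error rather than a runtime surprise.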

Applications need to have side effects. Their purpose is to interact with some back-end database to transfer funds, buy a train ticket, or vote. SpeakRight calls these transactions. Each flow object, upon finishing, can invoke a transaction. If the transaction is invoked asynchronously, SpeakRight pauses the callflow until the transaction completes.

Of course, before we accept data we need to validate it. Flow objects have a ValidateInput method that is called whenever user input has occurred. The method can accept or reject the input. If input is rejected, the flow object is executed again.
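A hedged sketch of a validation check for a question like "How many passengers are there?". The class `PassengerCountFlow`, the 1 to 9 range, and the boolean return value are assumptions; SpeakRight's real ValidateInput signature may differ.

```java
// Hypothetical flow that accepts a passenger count from 1 to 9.
class PassengerCountFlow {
    Integer numPassengers; // filled in only when input is accepted

    // Returns true to accept the input, false to reject it (in which case
    // the runtime would execute this flow object again).
    boolean validateInput(String input) {
        try {
            int n = Integer.parseInt(input.trim());
            if (n >= 1 && n <= 9) {
                numPassengers = n;
                return true;
            }
        } catch (NumberFormatException e) {
            // not a number: fall through and reject
        }
        return false;
    }
}
```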

Another part of speech recognition is confirmation, which is done whenever the recognition confidence level is below a confirmation threshold. SpeakRight uses pluggable confirmation strategies. Some of the strategies are: None, YesNo, ConfirmAndCorrect, and ImplicitConfirm. You can plug any confirmation strategy into any flow object.
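Pluggable confirmation amounts to the flow delegating one decision to a swappable object. In this sketch the strategy is a plain interface rather than a flow object, and the names (`ConfirmationStrategy`, `NoConfirmation`, `YesNoConfirmation`, `AskCityFlow`) and the 0 to 1 confidence scale are hypothetical:

```java
// Hypothetical strategy: returns a confirmation prompt, or null for "no need".
interface ConfirmationStrategy {
    String confirm(String recognized, double confidence);
}

class NoConfirmation implements ConfirmationStrategy {
    public String confirm(String recognized, double confidence) { return null; }
}

class YesNoConfirmation implements ConfirmationStrategy {
    private final double threshold;
    YesNoConfirmation(double threshold) { this.threshold = threshold; }
    public String confirm(String recognized, double confidence) {
        // Only confirm when the recognizer is below the confirmation threshold.
        return confidence < threshold ? "Did you say " + recognized + "?" : null;
    }
}

class AskCityFlow {
    private ConfirmationStrategy confirmation = new NoConfirmation();

    void setConfirmation(ConfirmationStrategy s) { confirmation = s; } // plug in any strategy

    String afterRecognition(String recognized, double confidence) {
        String prompt = confirmation.confirm(recognized, confidence);
        return prompt != null ? prompt : "OK, " + recognized + ".";
    }
}
```

The flow never checks which strategy it has, which is what makes any strategy usable with any flow.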

Finally, and most importantly, SpeakRight provides an extensible architecture for reusable components called SROs (SpeakRight Reusable Objects). These speech objects provide standard mechanisms for common tasks. There are SROs (some still to come) for input of common data types such as time, date, currency, and zip code, and for common control flow algorithms such as list traversal. SROs are highly configurable: prompts and grammars can be customized or replaced. Prompts can be customized at several levels, including pluggable formatters for rendering model values.

Re-usability is a key technical challenge in the project. It should be easier (and better) to re-use existing objects than to roll your own. Prompts, for example, need to be highly flexible. Users do not like prompts that switch back and forth between TTS and pre-recorded audio; a single voice is important. SpeakRight provides an audio matching feature, where all prompt segments are looked up in an audio match XML file that contains a replacement audio file for each segment. Of course, prompt coverage tools are needed to help build the list of prompts to be sent for professional recording. These tools are (cough) TBD.

Tutorial 2 - Adding a Menu

Let's add a menu to our Hello app.

Add the menu class to App1 (as an inner class).

Here's the GRXML file:

```java
import org.speakright.core.*;

public class App1 extends SRApp {
    public App1() {
        super("app1"); // give the app a name (useful for logging)
        add(new PromptFlow("Welcome to SpeakRight!"));
    }
}
```

This flow will simply say "Welcome to SpeakRight!" and hang up.

There are a number of ways to run your app. Eventually you'll embed it in a Java servlet and point a VoiceXML browser at it. But for now, let's use the ITest console utility that comes with SpeakRight. Switch back to SRHello.java, which should have been created when you made the project.

```java
import org.speakright.itest.*;

public class SRHello {
    public static void main(String[] args) {
        System.out.println("SRHello");
        SRInteractiveTester tester = new SRInteractiveTester();
        App1 app = new App1();
        tester.run(app); // ITest plays the part of the VoiceXML platform
        System.out.println("Done.");
    }
}
```

OK. Let's run the app. In the Package Explorer, find your class file SRHello.java, right-click and select Run As and Java Application. ITest runs in the Java console, and you should see its prompt in the Console view.

The "1>" indicates this is the first turn. That is, your app is about to create its first VoiceXML page. Type "go" and press Enter. You'll see some logging, followed by the generated VoiceXML.

When a SpeakRight app is in a java servlet, this VoiceXML would be returned to the VoiceXML server, where it would be executed. The results of that execution would be sent back to SpeakRight. In this case, the page simply plays some text-to-speech audio, so there is no user input to send back. In ITest, we are the VoiceXML platform and we send back (using the "go" command) the results of executing the most recent page. So type "go" and press Enter again. The app has nothing more to do so it finishes, along with ITest.

That's it. You've created your first SpeakRight app.

Tutorial 1 - Creating a Hello App

First let's create our flow class. Each app will have a top-level flow object. In the Package Explorer, right-click on your project SRHello and select New / Class. Name it App1. Here's the Package Explorer (ignore Model.java for now).

You should then be able to edit the App1.java file, adding the following:

```java
import org.speakright.core.*;

public class App1 extends SRApp {
    public App1() {
        add(new PromptFlow("Welcome to SpeakRight!"));
    }
}
```

This flow will simply say "Welcome to SpeakRight!" and hang up.

There are a number of ways to run your app. Eventually you'll embed it in a Java servlet and point a VoiceXML browser at it. But for now, let's use the ITest console utility that comes with SpeakRight. Switch back to SRHello.java, which should have been created when you made the project.

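```java
import org.speakright.core.*;   // assumed package of SRFactory and SRRunner
import org.speakright.itest.*;

public class SRHello {
    public static void main(String[] args) {
        System.out.println("SRHello");
        SRInteractiveTester tester = new SRInteractiveTester();

        // empty strings are enough for a simple app (see Initialization)
        SRFactory factory = new SRFactory();
        SRRunner runner = factory.createRunner("", "", "", null);

        App1 app = new App1();
        tester.run(runner, app); // run the app with the runner created above
        System.out.println("Done.");
    }
}
```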

For a simple app we pass empty strings to createRunner. For more information on the parameters to pass to createRunner, see Initialization.

OK. Let's run the app. In the Package Explorer, find your class file SRHello.java, right-click and select Run As and Java Application. ITest runs in the Java console, and you should see its prompt in the Console view.

The "1>" indicates this is the first turn. On each turn your app will create a VoiceXML page. Type "go" and press Enter. You'll see some logging, followed by the generated VoiceXML.

When a SpeakRight app is in a Java servlet, this VoiceXML page would be sent to the VoiceXML server (as part of an HTTP reply). The server would execute the page, and send back the results (as a new HTTP request). In ITest, we take the place of the VoiceXML server. In the "go" command we specify the results of executing the most recent page. In this case, the page is output only; it only plays text-to-speech audio, so there is no user input to send back. So type "go" and press Enter again. The app has nothing more to do so it finishes, along with ITest.

That's it. You've created your first SpeakRight app. To see how a fully functional app is written, see Simpsons Demo, or get more documentation at Table of Contents.

Getting Started

After reading What is SpeakRight and perhaps some other articles in Table of Contents, you're ready to install and try it out.

Installation

Download the code from here. I used Eclipse 3.2.1, which uses Java (JRE/JDK) 1.5.0.11, and Tomcat 5.5.

You'll also need

- log4j 1.2.8 (comes with Eclipse)

- StringTemplate 3.0 from here, which includes ANTLR 2.7.7

This tutorial assumes you are using Eclipse 3.2.1. Any Java IDE should work.

Start Eclipse and select File / New / Project and select New Java Project. Name it "SRHello".

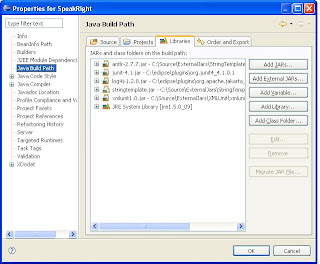

In the Package Explorer (on the left-hand side of your screen), right-click the SRHello project and select Properties. Select Java Build Path and then the Libraries tab.

Select Add External JARs and add the SpeakRight, Log4J, StringTemplate, and ANTLR jars. Here's the dialog box. Ignore JUnit and XMLUnit; they're not needed at this time.

Now we're ready to write some code! On to the SRHello app.

Frequently Asked Questions

What is SpeakRight?

SpeakRight is an open-source Java framework for writing speech recognition applications in VoiceXML. SpeakRight apps live inside a web server, such as a Java servlet. The SpeakRight runtime executes a set of flow objects, and generates VoiceXML pages, one at a time. SpeakRight is stateless, so it can work across multiple HTTP requests.

How is it licensed?

SpeakRight is licensed under the OSS-approved Eclipse Public License (EPL). Under this license anyone can download and use SpeakRight. There are no license fees for use within a commercial product. EPL's main restriction is that if you modify SpeakRight source files and distribute them, then you must release those files under the EPL. Of course, your own source files (which may extend or use SpeakRight classes) are exempt.

Where does the name SpeakRight come from?

The name comes from the term "speak right into the microphone". Interviewers used this phrase in the early days of TV. People being interviewed didn't know how to behave with this new technology, and often froze on camera. The goal of the SpeakRight Framework is to help make people comfortable with speech recognition applications.

Why use SpeakRight?

I have written a number of speech apps on different SALT and VoiceXML platforms. After trying a number of different approaches (raw VoiceXML, drag-and-drop toolkits), I came to believe that the complexity of speech application VUIs required a code-based approach (see Benefits of a Code-Based Approach).

What's the current status?

The current status is alpha. Single-slot questions are supported, with NBest support. Audio can be audio files, TTS, or generated values (such as today's date, or an application variable). Inline, built-in, and external grammars are supported. Also supported: control flow, event handling, the model, DTMF-only mode, and confirmation. Transfer and Record are supported too.

Testing can be done from JUnit, a console app, from a web browser (apps can generate an HTML version of themselves), or from a VoiceXML platform.

Not supported: mixed initiative, hot words (link tag).

What platforms does it run on?

SpeakRight has so far only been tested on Voxeo's free site. SpeakRight uses a template engine (see StringTemplate template engine), so changing the output is often as easy as changing the template file.

What is SpeakRight?

SpeakRight is an open-source Java framework for writing speech recognition applications in VoiceXML. Unlike most proprietary speech-app tools, SpeakRight is code-based. Developers write their application in Java using SpeakRight classes and their own extensions. Java IDEs such as Eclipse provide great debugging, and fast Java-aware editing and refactoring.

The basic class is the flow object which has a similar role to controls in GUI frameworks. A flow object manages presentation (prompts, grammars, and retry logic), and control flow. In MVC terms, a flow object is both the view and the controller. Flow objects can be customized (by setting properties), or extended using sub-classing. SpeakRight provides built-in objects for standard data types (time, date, alphanum), and for standard flow algorithms (forms, menus, list traversal, confirmation). Your application can combine and customize these built-in flows, or create your own.

Features

- VoiceXML 2.1 (partial support currently, more to come. See VoiceXML Tags Supported)

- Inline, built-in, and external grammars (GSL and GRXML).

- Prompts can be TTS, audio, or rendered data values. External prompts in XML files for multi-lingual apps and post-deployment flexibility.

- Built-in support for noinput, nomatch, and help events. Escalated prompts.

- Built-in validation. A flow object's ValidateInput method can validate user input and accept, ignore, or retry the input.

- NBest with confirmation of each NBest result. Optional skip list.

- A library of re-usable "speech objects", called SROs, is provided for common tasks such as time and dates, numbers, and currency.

- Disconnect, Transfer, and GotoUrl

- Flow Objects. A flow object represents a dialog state such as asking for a flight number. Each flow object is rendered as one or more VoiceXML pages. Flow objects are fully object-oriented: you can use inheritance, composition, and nesting to combine flow objects. The speech application itself is a flow object.

- Throw/catch used for errors such as max-retries-exceeded, validation-failed, or user-defined events. This simplifies callflow development because it encourages centralized error handling (although local error handling can be done when needed). Also, throw/catch increases software re-use because (unlike a 'goto') it decouples the part of the app throwing the error from the part of the app that handles it.

- MVC architecture. Built-in model allows sharing of data between flow objects.

- Flow objects can invoke business logic upon completion.

- Extension points are available in the framework for customization.

- Callflows can be unit tested in JUnit.

- Interactive tester for executing callflows using the keyboard.

- SpeakRight servlets have an HTML output mode. This can be used to test a callflow using an ordinary web browser.

SpeakRight doesn't provide features for data access or web services. Other open-source projects already do those things very well.